Series: How to Choose an FPGA Chip - Part 1.4: Embedded Resources and SoC Integration — Extending FPGA Capabilities

2025-09-28 10:59:26

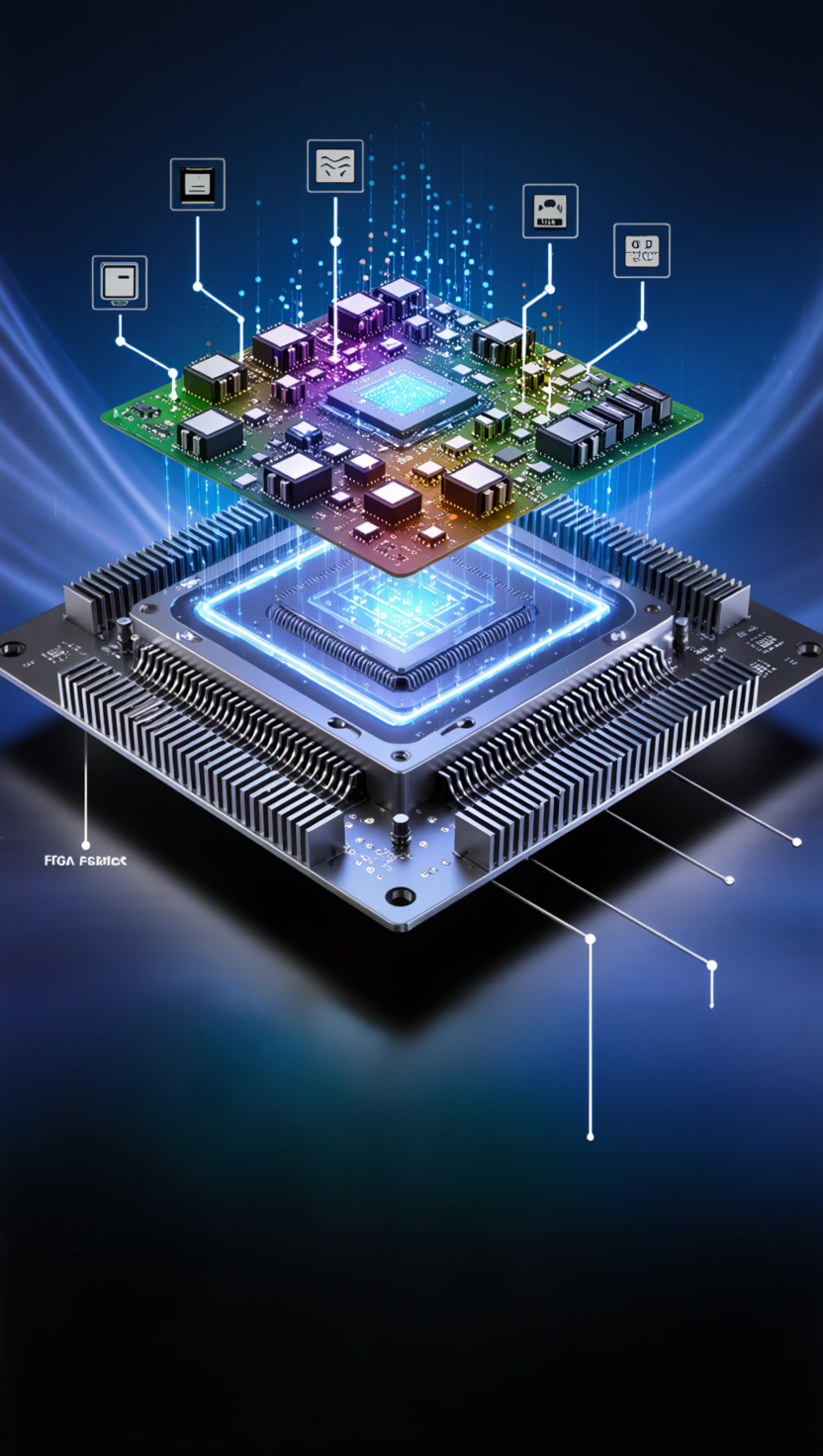


Modern FPGAs are more than arrays of LUTs and flip-flops. Hardened embedded resources such as ARM cores, DSP slices, NPUs, and other hardware accelerators turn them into hybrid computing platforms. For engineers, this integration reduces external component count and improves system performance. For managers, it affects BOM cost, licensing, and sourcing options.

1. FPGA + ARM Cortex Integration

- Engineer’s View: Devices such as Xilinx Zynq and Intel SoC FPGAs integrate hard ARM Cortex-A application cores, and some families (e.g., Zynq UltraScale+) add Cortex-R real-time cores. Software can therefore run Linux or a real-time operating system alongside the FPGA logic, reducing the need for an external MPU or MCU. It also simplifies heterogeneous workloads in which software and custom hardware must cooperate on the same chip.

- Manager’s View: Integration reduces overall BOM cost and PCB complexity. However, SoC FPGAs often carry a higher ASP and licensing fees. Lifecycle planning must account for long-term support of both the FPGA fabric and the processor subsystem.
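To make the software/hardware split concrete, here is a minimal sketch of how Linux software on the ARM cores might talk to a custom block in the FPGA fabric through memory-mapped registers. The register offsets and the `FabricRegs` class are hypothetical, not part of any vendor API. On a real board the path would typically be a device node such as `/dev/uio0` (an assumption about the system setup); here a zero-filled temporary file stands in for it so the sketch runs anywhere.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical register offsets for a custom block in the FPGA fabric.
REG_CONTROL = 0x00    # assumed: write 1 to start processing
REG_THRESHOLD = 0x08  # assumed: detection threshold
MAP_SIZE = 4096       # map one page of register space


class FabricRegs:
    """32-bit little-endian register access over a memory-mapped file."""

    def __init__(self, path, size=MAP_SIZE):
        self._fd = os.open(path, os.O_RDWR)
        self._mem = mmap.mmap(self._fd, size)

    def write32(self, offset, value):
        # Mask to 32 bits so oversized values do not raise struct.error.
        struct.pack_into("<I", self._mem, offset, value & 0xFFFFFFFF)

    def read32(self, offset):
        return struct.unpack_from("<I", self._mem, offset)[0]

    def close(self):
        self._mem.close()
        os.close(self._fd)


def make_stand_in(size=MAP_SIZE):
    """Create a zero-filled file that stands in for the device node."""
    f = tempfile.NamedTemporaryFile(delete=False)
    f.write(b"\x00" * size)
    f.close()
    return f.name


def demo():
    """Write two registers, read them back, and clean up the stand-in."""
    path = make_stand_in()
    regs = FabricRegs(path)
    try:
        regs.write32(REG_THRESHOLD, 128)
        regs.write32(REG_CONTROL, 1)
        return regs.read32(REG_THRESHOLD), regs.read32(REG_CONTROL)
    finally:
        regs.close()
        os.remove(path)
```

With the stand-in file, `demo()` simply reads back what it wrote. On real hardware the fabric logic would react to the control write, and a status register would normally be polled or an interrupt awaited.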

2. DSP, NPU, and Hardware Accelerator Blocks

- Engineer’s View: DSP slices, which are hardened multiply-accumulate blocks, are critical for math-heavy tasks such as signal processing and AI inference. Some modern devices also integrate NPUs or hardened AI engines (e.g., the AI Engines in Xilinx Versal). These blocks provide deterministic acceleration for workloads such as CNNs, FFTs, and cryptography.

- Manager’s View: Hardened accelerators improve performance per watt and shorten time-to-market, but they also tie the design more closely to one vendor’s ecosystem, increasing lock-in risk.

- Examples: AMD/Xilinx Versal AI Core; Intel Agilex with DSP-rich fabric.
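When sizing a device for a math-heavy design, a rough DSP slice budget is a useful first check. The sketch below estimates the slice count for a real-valued FIR filter. It assumes that each slice performs one multiply-accumulate per fabric clock cycle and that a symmetric filter can use a pre-adder to halve the multiplies. The function name and the example figures are illustrative, not taken from any vendor tool.

```python
import math


def dsp_slices_for_fir(taps, sample_rate_hz, fabric_clock_hz, symmetric=False):
    """Estimate DSP slices for a real-valued FIR filter.

    Assumes one multiply-accumulate per slice per fabric clock cycle, so
    slices are time-shared when the clock runs faster than the sample rate.
    """
    # A symmetric (linear-phase) filter can add mirrored samples first,
    # which halves the number of multiplies per output sample.
    multiplies_per_sample = math.ceil(taps / 2) if symmetric else taps
    macs_per_second = multiplies_per_sample * sample_rate_hz
    # Integer ceiling division avoids floating-point rounding surprises.
    return (macs_per_second + fabric_clock_hz - 1) // fabric_clock_hz


# Illustrative figures: 64 taps, 100 MS/s input, 400 MHz fabric clock.
plain = dsp_slices_for_fir(64, 100_000_000, 400_000_000)
folded = dsp_slices_for_fir(64, 100_000_000, 400_000_000, symmetric=True)
print(plain, folded)  # 16 8
```

An estimate like this ignores routing, pipelining, and bit-width limits, so it is only a lower bound to compare against the DSP count on a candidate device's datasheet.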

Comparative Table: Embedded Resources in Modern FPGAs

| Embedded Resource | Function | Use Cases | FPGA Families | Business Impact |
| --- | --- | --- | --- | --- |
| ARM Cortex Cores | Run OS, control logic | Embedded systems, edge computing | Xilinx Zynq, Intel SoC FPGAs | Higher ASP, BOM reduction |
| DSP Slices | Math acceleration | Signal processing, motor control | Xilinx Artix-7, Intel Cyclone 10 | Improved efficiency, stable cost |
| NPU / AI Engines | AI/ML acceleration | CNN inference, NLP models | Xilinx Versal AI Core | Vendor lock-in, premium pricing |
| HBM Integration | High-bandwidth memory | AI training, networking | Intel Stratix 10 MX, Xilinx Virtex UltraScale+ | High ASP, long lead times |

Case Studies

Case Study 1: Embedded Vision System with Zynq SoC

Challenge: A surveillance system required real-time video analytics and network control.

Solution: Xilinx Zynq SoC integrating dual Cortex-A9 cores and FPGA fabric.

Result: Image recognition ran in the FPGA fabric while Linux-based control ran on the ARM cores, all on a single device.

Manager’s Perspective: ~$80 ASP, but eliminated the need for a separate MCU, reducing BOM.

Case Study 2: AI Inference at the Edge

Challenge: A medical imaging company needed low-latency CNN inference on portable devices.

Solution: Xilinx Versal AI Core with hardened AI Engines.

Result: Achieved 5× better inference performance-per-Watt vs. GPU solutions.

Manager’s Perspective: ASP ~$2000; the higher upfront cost was offset by reduced TCO through better power efficiency.

Case Study 3: Networking Accelerator with HBM Integration

Challenge: A hyperscale operator needed terabit-scale packet buffering.

Solution: Intel Stratix 10 MX with integrated HBM.

Result: Delivered >400 GB/s memory bandwidth.

Manager’s Perspective: ASP ~$2500, with limited supply; multi-year contract needed.
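The bandwidth figure in Case Study 3 can be sanity-checked with simple arithmetic. Every buffered packet is written to memory once and read back once, so the memory must sustain roughly twice the line rate. The sketch below works this out. The `efficiency` parameter, which models the fraction of peak bandwidth usable in practice, and the 0.7 value are assumptions for illustration, not measured data.

```python
def buffer_bandwidth_gbytes(line_rate_tbps, efficiency=1.0):
    """Memory bandwidth (GB/s) needed to buffer traffic at a given line rate.

    Each packet is written once and read once, so the memory sees twice
    the line rate. `efficiency` is the assumed usable fraction of peak
    memory bandwidth (1.0 = ideal).
    """
    line_rate_gbytes = line_rate_tbps * 1000 / 8  # Tb/s -> GB/s
    return 2 * line_rate_gbytes / efficiency


ideal = buffer_bandwidth_gbytes(1.0)           # 250.0 GB/s
derated = buffer_bandwidth_gbytes(1.0, 0.7)    # about 357 GB/s
print(f"ideal: {ideal:.0f} GB/s, at 70% efficiency: {derated:.0f} GB/s")
```

Under these assumptions, buffering 1 Tb/s needs about 250 GB/s in the ideal case and about 357 GB/s at 70% efficiency, both within the >400 GB/s quoted above. External DDR interfaces would struggle to reach this range without many parallel channels.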

Conclusion

Embedded resources and SoC integration redefine FPGA selection. For engineers, they simplify design and enable hybrid workloads. For managers, they reduce BOM but increase ASP and vendor dependency. The right decision requires weighing integration benefits against flexibility and long-term support risks. As FPGAs continue to evolve, embedded resources will be a decisive factor in bridging hardware performance with software adaptability.